Did you see that photo of Pope Francis rocking a puffy white designer coat? Maybe you also saw headlines informing you that the pontiff’s styling was entirely the creation of an artificial intelligence image generator. The problem is that the image went viral, many people took it at face value, and not everyone who saw it went on to read the headlines.

Fake images of celebrities might not seem very consequential, but the potential of generative AI for deeply harmful fraud and misinformation is readily apparent. These AI models are so powerful in part because they are trained on vast amounts of text and images on the internet, which also raises issues of intellectual property protections and data privacy.

People will soon be interacting with these AI systems in myriad ways, from using them to search the web and write emails to developing relationships with them. Not surprisingly, there’s a growing movement for government to regulate the technology. It’s not at all clear, however, how to do so.

Three experts on technology policy, Penn State’s S. Shyam Sundar, Texas A&M’s Cason Schmit and UCLA’s John Villasenor, provide different perspectives on the challenges to building guardrails along the road to our brave new AI future.

Also today:

Eric Smalley

Science + Technology Editor

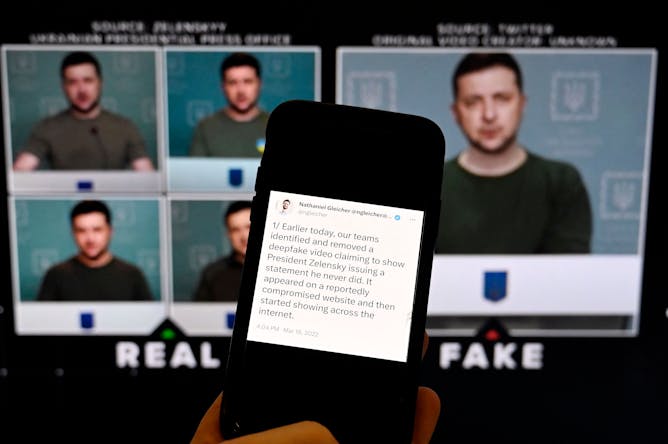

The new generation of AI tools makes it a lot easier to produce convincing misinformation.

Photo by Olivier Douliery/AFP via Getty Images

S. Shyam Sundar, Penn State; Cason Schmit, Texas A&M University; John Villasenor, University of California, Los Angeles

Powerful new AI systems could amplify fraud and misinformation, leading to widespread calls for government regulation. But doing so is easier said than done and could have unintended consequences.

Politics + Society

-

Raquel Aldana, University of California, Davis

‘Extensive use’ of detention led to a tragic fire, according to the UN special rapporteur for migrant rights. US-Mexico policy has fueled the growth of such detention.

-

Jeffrey Bellin, William & Mary Law School

The Manhattan District Attorney will need to prove several different points in its prosecution of Trump. But securing an unbiased jury will also be a challenge in this unprecedented case.

-

James D. Long, University of Washington; Morgan Wack, University of Washington; Victor Menaldo, University of Washington

Criminal charges against former President Donald Trump for his role in the Jan. 6 Capitol riot could spark political consequences – not only for Trump, but for US democracy.

Science + Technology

-

Matthew G. Hill, Iowa State University

Researchers are analyzing the fossil cranium of a Smilodon fatalis that lived more than 13,000 years ago to learn more about the lifestyle of this iconic big cat.

-

John F. Tooker, Penn State; Daniel Bliss, Penn State; Jared Adam, Penn State

These members of the mollusk family may be slow, small and slimy, but they are an indispensable part of the ecosystem.

Arts + Culture

-

Marco Dehnert, Arizona State University; Joris Van Ouytsel, Arizona State University

Early research finds that people get just about the same gratification from sexting with a chatbot as they do with another human.

Environment + Energy

-

Vivian R. Underhill, Northeastern University; Lourdes Vera, University at Buffalo

Fracking for oil and gas uses millions of pounds of chemicals, some of which are toxic or carcinogenic. Two researchers summarize what companies have disclosed and call for more transparency.

Education

-

Manil Suri, University of Maryland, Baltimore County

Nearly four decades after President Ronald Reagan proclaimed the first National Math Awareness Week, math readiness and enrollment in college math programs continue to decline.

Health + Medicine

-

William Robertson, University of Memphis

Though some LGBTQ+ health care providers may try to separate their personal and professional identities, the prejudice they experience highlights their queerness in the clinic.

Reader Comments 💬

“For domestic architecture, an unexplored property of free form curved walls and ceilings is acoustic reflections. Compared to the acoustic environment of flat wall rectangular rooms, sound within spaces bound by complex concave and convex curved walls will focus and disperse differently, maybe step by step as you walk through a room or passageway.”

– Reader Stewart Kaplan on the story “3D printing promises to transform architecture forever – and create forms that blow today’s buildings out of the water”

About The Conversation

We're a nonprofit news organization dedicated to helping academic experts share ideas with the public. We can give away our articles thanks to the help of foundations, universities and readers like you.
